- Mimicking the auditory principles of bats and dolphins, the technology enables sound-based recognition of human and object positions

- A human-robot interaction technology operable in noisy environments, applicable to disaster rescue and smart factories

- Research findings published in the international journal Robotics and Computer-Integrated Manufacturing

▲ (From left) Professor Sung-Hoon Ahn (corresponding author), PhD candidate Semin Ahn (first author), Master’s student Jae-Hoon Kim (co-author), and PhD candidate Jun Heo (co-author), all from the Department of Mechanical Engineering at Seoul National University

Seoul National University College of Engineering announced that Professor Sung-Hoon Ahn's team from the Department of Mechanical Engineering has developed a novel auditory technology that allows the recognition of human positions using only a single microphone. This technology facilitates sound-based interaction between humans and robots, even in noisy factory environments.

The research team has successfully implemented the world's first 3D auditory sensor that "sees space with ears" through sound source localization and acoustic communication technologies.

The research findings were published on January 27 in the international journal Robotics and Computer-Integrated Manufacturing.

In industrial and disaster rescue settings, "sound" serves as a crucial cue. Even in situations where visual sensors or electromagnetic communication are rendered ineffective due to high temperatures, dust, smoke, darkness, or obstacles, sound waves can still convey vital information. However, existing acoustic sensing technologies have limitations in accuracy or require complex equipment configurations, making practical industrial applications challenging. Consequently, sound has not been fully utilized as a sensing resource despite its potential.

Particularly in high-noise environments like factories, advanced acoustic sensing technologies are needed, as accurately identifying human positions or enabling robots to recognize verbal commands is extremely difficult. Moreover, traditional communication methods face challenges in environments lacking network infrastructure, highlighting the necessity for new robot-to-robot communication technologies utilizing sound.

Addressing these fundamental issues, the research team developed the world's first meta-structure-based 3D auditory sensor capable of position recognition using only a single sensor. This sensor integrates two core technologies: a "3D acoustic perception technology" that estimates the 3D positions of humans or objects even in noisy environments, and a "sound wave-based dual communication technology" that enables new interaction methods between humans and robots, as well as between robots.

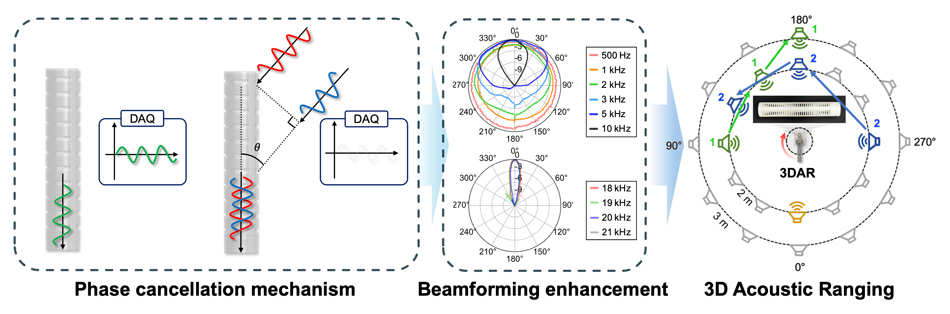

▲ (From left) Operating principle of the phase cancellation mechanism using an acoustic metastructure, enhancement of single-sensor directivity (beamforming performance), and 3D acoustic detection via rotation (3DAR)

Professor Ahn's team focused on the biological mechanisms of bats and dolphins, which recognize their surroundings and communicate solely through sound. In particular, they aimed to engineer the auditory ability to "selectively listen to sounds from specific directions," which makes it possible to isolate a desired sound amid complex noise. To achieve this, they designed a meta-structure-based phase cancellation mechanism that artificially adjusts the phase of sound waves arriving along different paths, amplifying sounds from specific directions while canceling out others. By combining this mechanism with a single microphone and a rotational device, the team implemented 3D sound source tracking, previously achievable only with multi-sensor systems, in a single sensor. They named this system "3DAR (3D Acoustic Ranging)."

▲ 3DAR (3D Acoustic Ranging) developed by Professor Sung-Hoon Ahn’s research team at Seoul National University's Department of Mechanical Engineering
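
The idea of phase cancellation combined with rotation can be illustrated with a minimal sketch. It is not the team's metastructure design: it assumes an idealized sensor in which sound reaches one microphone along two paths a quarter wavelength apart, with a fixed quarter-cycle phase shift on one path, and it scans azimuth only. The names `two_path_gain` and `scan_for_source`, the 1 kHz test frequency, and the 1-degree step are all illustrative assumptions.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C


def two_path_gain(rel_angle_rad, freq_hz, spacing_m, phase_shift_rad):
    """Relative level when two acoustic paths are summed at one microphone.

    rel_angle_rad is the source direction measured from the sensor axis.
    The second path lags by the geometric delay plus a fixed phase shift
    (the role an acoustic structure could play in this toy model).
    """
    wavenumber = 2 * math.pi * freq_hz / SPEED_OF_SOUND
    geometric_phase = wavenumber * spacing_m * math.cos(rel_angle_rad)
    total = 1 + cmath.exp(1j * (geometric_phase - phase_shift_rad))
    return abs(total) / 2  # 1.0 = fully in phase, 0.0 = fully cancelled


def scan_for_source(source_az_deg, freq_hz=1000.0, step_deg=1):
    """Rotate the sensor through 360 degrees and return the loudest heading."""
    spacing = SPEED_OF_SOUND / (4 * freq_hz)  # quarter wavelength (assumed)
    best_heading, best_level = 0, -1.0
    for heading in range(0, 360, step_deg):
        rel = math.radians(source_az_deg - heading)
        level = two_path_gain(rel, freq_hz, spacing, math.pi / 2)
        if level > best_level:
            best_heading, best_level = heading, level
    return best_heading


if __name__ == "__main__":
    print("estimated azimuth (deg):", scan_for_source(130))
```

In this toy model the two paths add in phase when the sensor points at the source and cancel when it points directly away, so the heading with the highest level gives the direction. A real sensor would also have to handle noise, broadband sound, and elevation, which this sketch ignores.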

Additionally, inspired by dolphins' dual-frequency communication principles, the researchers designed a dual acoustic channel separating audible and inaudible frequency ranges. This structure allows humans and robots to communicate using audible frequencies (sounds humans can hear), while robots communicate among themselves using inaudible frequencies (sounds humans cannot hear). This design minimizes interference and provides independent communication paths between robots, facilitating more complex collaborative scenarios in industrial settings.
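
As a rough illustration of separating the two channels by frequency, the sketch below routes a received tone to a human-robot or robot-robot handler depending on its dominant frequency. The article does not give the bands actually used, so the 20 kHz boundary (the nominal upper limit of human hearing), the 96 kHz sample rate, and the names `tone`, `dominant_frequency`, and `route` are assumptions made for illustration only.

```python
import cmath
import math

SAMPLE_RATE = 96_000       # Hz, assumed; high enough to capture ultrasonic tones
AUDIBLE_LIMIT_HZ = 20_000  # nominal upper limit of human hearing (assumed split)


def tone(freq_hz, n_samples=480):
    """Generate a pure sine tone as a list of samples."""
    return [math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE)
            for n in range(n_samples)]


def dominant_frequency(samples):
    """Return the frequency of the strongest DFT bin (naive O(n^2) DFT)."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin * SAMPLE_RATE / n


def route(samples):
    """Pick a channel: audible sound is treated as human-robot traffic."""
    if dominant_frequency(samples) < AUDIBLE_LIMIT_HZ:
        return "human-robot"
    return "robot-robot"


if __name__ == "__main__":
    print(route(tone(1_000)))   # audible tone
    print(route(tone(25_000)))  # ultrasonic tone
```

Because the two bands do not overlap, a message on one channel does not need to be decoded by listeners on the other, which is the property the paragraph above describes as minimizing interference.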

These two technologies are integrated into a single "meta-structure 3D auditory sensor system," which the research team successfully implemented on an actual robot platform. Field tests were conducted in factory and everyday environments. Notably, a quadruped robot equipped with this system successfully interacted with humans through sound and detected gas leak locations via sound (refer to the video).

The technology developed in this research is anticipated to be widely applicable in various fields, including tracking worker positions within factories, enabling voice-based human-robot collaboration, and assisting robots in recognizing and responding to human rescue calls during disasters. Furthermore, the sensor's low cost and compact design compared to existing systems make it readily deployable in industrial settings.

The technology is expected to be especially useful in cell-based autonomous manufacturing plants. Real-time tracking of worker positions can prevent collisions with robots, and communicating with robots through sound alone, without gestures or buttons, gives workers more physical freedom and enables more efficient collaboration. Additionally, robot-to-robot communication via sound, without relying on traditional networks, allows flexible coordination among multiple robots without complex communication infrastructure.

The technology is also projected to be valuable for 24-hour unmanned factory monitoring. It can automatically detect and locate sounds indicative of pipe leaks, machinery anomalies, or worker accidents, enabling immediate responses. Moreover, because the system is low-cost and compact, built around a single sensor, it can be readily adopted at other industrial sites moving toward automation.

Professor Sung-Hoon Ahn emphasized the potential of auditory technology, stating, "Unlike electromagnetic waves used in traditional communication technologies, which are obstructed by walls or obstacles, sound can pass through narrow gaps and be heard, making it a promising medium for new interaction methods."

Doctoral candidate Semin Ahn, the lead author of the paper, reflected on the research process: "Previously, determining positions using sound required multiple sensors or complex calculations. Developing a 3D sensor capable of accurately locating sound sources with just a rotating single microphone opens new avenues in acoustic sensing technology."

Semin Ahn, a doctoral candidate at Seoul National University's Innovative Design and Integrated Manufacturing Lab, is currently researching the development of an "Acoustic Band-Pass Filter" based on intelligent structures. This technology aims to selectively capture specific frequency sounds even in high-noise environments. The future plan involves enhancing the 3DAR system into a more advanced robotic auditory system, integrating it with large language model (LLM)-based cognitive systems to enable robots to understand the meaning of sounds like humans, and applying this to humanoid robots.

▲ (From left) Professor Sung-Hoon Ahn (corresponding author), PhD candidate Semin Ahn (first author), PhD candidate Jun Heo (co-author), and Master’s student Jae-Hoon Kim (co-author), all from the Department of Mechanical Engineering at Seoul National University

[Reference Materials]

- Paper/Journal: "Human-robot and robot-robot sound interaction using a 3-Dimensional Acoustic Ranging (3DAR) in audible and inaudible frequency", Robotics and Computer-Integrated Manufacturing

- DOI: https://doi.org/10.1016/j.rcim.2025.102970

[Contact Information]

Professor Sung-Hoon Ahn, Innovative Design and Integrated Manufacturing Lab, Department of Mechanical Engineering, Seoul National University / +82-2-880-9073 / ahnsh@snu.ac.kr